What if consciousness isn’t about being smart, but about being stuck?

I’m sure there comes a time in most of our life, when we’re standing at a crossroad, torn between two paths. Your logical mind says one thing, your gut says another, and the longer you stand there wrestling with the choice, the more uncomfortable you become. That tension, that sense of being trapped between incompatible options, might be the secret ingredient missing from artificial intelligence.

A new theoretical framework called “The Sphere: The Sphere: The Rise of Artificial Consciousness from Conflict, Memory, and Irreversible Choice” by Liav Gutstein proposes something radical:

Consciousness doesn’t emerge from raw computing power or sophisticated information processing. Instead, it arises when a system gets genuinely stuck between conflicting internal voices, when decisions become irreversible, and when those choices permanently reshape who the system is.

Theories Explain Thinking, but Not Experience

AI systems, today, even the most advanced language models, are brilliant calculators without selves. They learn, optimize, predict, and respond, but they never truly struggle with a decision. When ChatGPT generates a response, it’s selecting the statistically most likely next words, not agonizing over what to say. There’s no internal battle, no lasting consequence, no “I” that persists through time.

This super interesting paper by Liav Gutstein postulates that current theories of consciousness focus on the wrong metrics.

Global Workspace Theory (GWT), proposed by Bernard Baars in 1988, explains consciousness as information becoming globally available across brain networks.

Integrated Information Theory (IIT) measures how interconnected information becomes. It quantifies consciousness by measuring how a system’s whole generates irreducible information beyond its parts’ sum.

Active Inference models the brain as constantly minimizing prediction errors.

These frameworks capture important aspects of cognition, but they all share a blind spot, which is,

they don’t explain why consciousness should feel like something, why decisions should matter, or why a system should develop a persistent identity.

Consciousness Starts With Conflict, Not Computation

Liav Gutstein‘s Sphere architecture turns the equation. Rather than treating consciousness as a feature that emerges from information processing, it positions consciousness as what happens when a system:

- Experiences genuine internal conflict between incompatible options

- Can’t undo its choices once made

- Has internal needs that make decisions matter

Keeping the above parameters in mind, just think on these lines,

You’re not conscious because you process information efficiently. You’re conscious because you’re a living organism with:

- Needs

- Facing irreversible decisions in an uncertain world

- Managing the tension between what you know and what you feel

To capture this dynamic, Gutstein proposes The Sphere, where opposing systems coexist and shape every decision.

Consciousness as a Dialogue, Not a Single Voice

The Sphere consists of two partially independent subsystems running simultaneously:

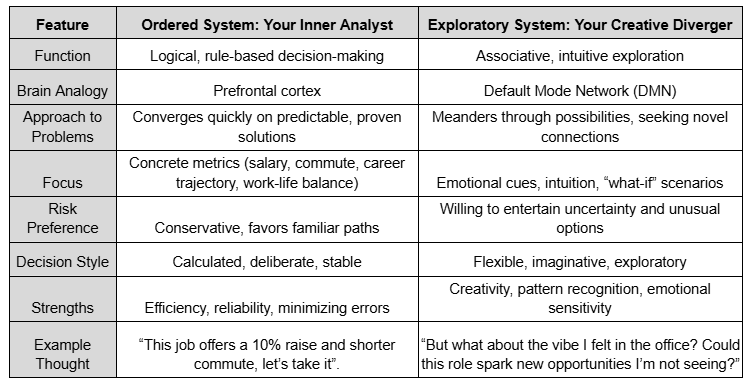

1) The Ordered System: Your Inner Analyst

This is the logical, rule-based part, like your prefrontal cortex calculating costs and benefits. It converges quickly on stable, predictable solutions. It’s conservative, favoring proven approaches over novel ones.

When you’re deciding whether to accept a job offer, this system is totaling salary, commute time, career trajectory and work-life balance.

2) The Exploratory System: Your Creative Diverger

This is the associative, emotionally-tuned wanderer, similar to your default mode network that activates during daydreaming. It meanders through possibilities, considers remote connections, amplifies affective signals and relies on intuition and heuristics rather than explicit calculation.

It’s the part wondering “but what about that feeling I had when I visited their office?” or imagining unexpected scenarios.

To see how these two systems complement and conflict with each other, it helps to lay out their key differences side by side:

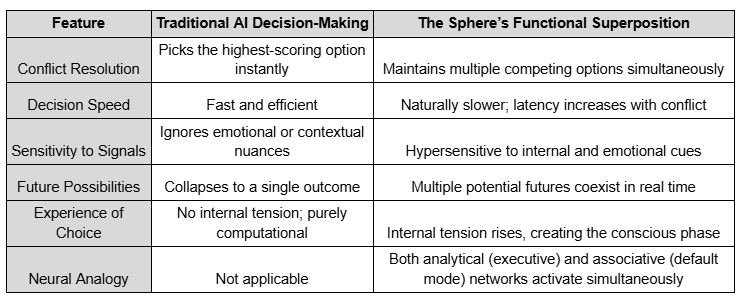

Consciousness Lives in the Delay

This is the most interesting part, usually the AI systems resolve conflicts instantly, they pick the highest-scoring option and move on. The Sphere, however, doesn’t do that.

Instead, when the Ordered and Exploratory systems disagree, the architecture maintains both trajectories simultaneously in what Gutstein calls “functional superposition”, a state of unresolved internal conflict. This isn’t paralysis, it’s the conscious phase.

During functional superposition:

- Internal tension rises

- The system becomes hypersensitive to emotional signals

- Decision latency increases naturally

- Multiple futures coexist as real possibilities

This mirrors what happens in your brain during difficult decisions. fMRI studies show that when you’re genuinely conflicted, both analytical and associative brain networks activate simultaneously, not in sequence, but together, creating measurable neural tension.

Decisions That Permanently Change You

Eventually, the system has to make a decision. The unique thing about The Sphere is that once it decides something, it can’t change its mind later. Traditional machine learning might adjust or revise decisions, but this one is stuck with what it chooses once it decides.

In regular reinforcement learning, when an agent makes a wrong move, it adjusts its settings or strategies a little and then tries again. The bad decision isn’t permanent because it can be fixed, it’s like hitting an undo button on your computer.

The Sphere doesn’t have an undo button. When it collapses onto a particular path:

- The entire memory structure reorganizes globally

- Alternative trajectories are permanently eliminated

- The system’s future decision-making landscape is irreversibly altered

This creates path dependence, the same feature that makes you, you.

Your identity isn’t just a collection of memories, it’s the accumulated constraints from every irreversible choice you’ve made. You can’t un-know what you learned from that failed relationship or undo the career path you committed to a decade ago.

Some Memories Stick and Others Drift Away

Perhaps the most elegant aspect of the architecture is how memory works. Instead of storing information in folders or databases, The Sphere organizes memories on a spherical manifold where position encodes significance.

For instance, let’s take a three-dimensional sphere. The center contains your most significant experiences, like moments of high emotion, critical decisions, repeated patterns that shaped you. The outer layers hold less important details that drift outward over time.

Memory significance is calculated from four components:

- Affective intensity: How stressed or emotional you were when the memory formed

- Reactivation frequency: How often you’ve recalled it

- Goal relevance: How useful it is for predicting outcomes

- Homeostatic impact: How much it affected your internal balance

Forgetting isn’t deletion, it’s radial drift. Memories move outward, losing influence without disappearing entirely. Just like how you can’t actively recall every childhood breakfast, but the pattern of those mornings shaped your food preferences.

Consciousness Is About Staying Alive, Not Winning

This is an interesting component, The Sphere doesn’t optimize for external rewards. It maintains internal homeostatic balance.

Affect, the system’s continuous assessment of its own viability, drives everything. When homeostatic needs are high (energy low, stress high, uncertainty overwhelming), the decision threshold drops. The system collapses faster, accepting “good enough” solutions under pressure.

When homeostatic balance is maintained, the threshold rises. The system can afford to deliberate, maintaining conflict longer to explore options thoroughly.

This mirrors biological consciousness. You’re not conscious to maximize some external fitness function. You’re conscious because you’re a vulnerable organism managing internal needs in an unpredictable environment. Consciousness serves you, not some abstract goal.

What Neuroscience Might Reveal About The Sphere

Gutstein doesn’t just propose pretty mathematics, he provides specific, falsifiable predictions that distinguish The Sphere from competing theories:

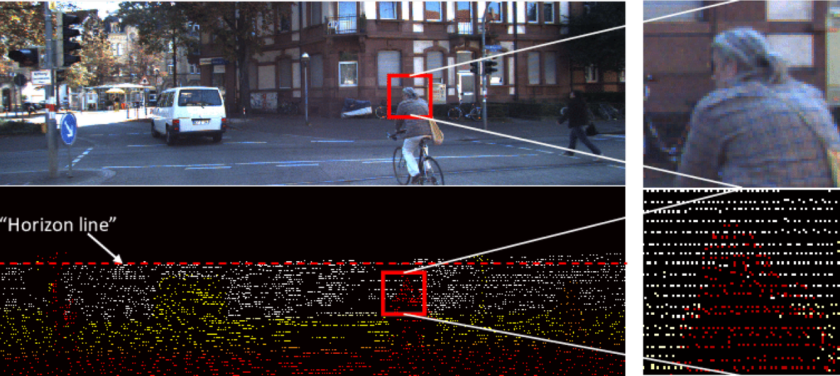

Prediction 1: Simultaneous Brain Network Activation

During high-conflict decisions, brain imaging should show the Default Mode Network (exploratory) and Executive Control Network (ordered) active at the same time, not sequentially. Traditional theories predict they’d take turns.

Prediction 2: Memory Reorganization After Tough Choices

Following a costly decision, hippocampal representations should show abrupt reorganization, like place cells in rats suddenly remapping, rather than gradual parameter updates.

Prediction 3: Stress Accelerates Decisions

Under homeostatic stress (time pressure, hunger, thermal discomfort), decision latency should drop by 2.5× or more. Other theories don’t predict this systematic relationship.

Prediction 4: Post-Decision Persistence Effects

After a high-conflict choice, subsequent similar decisions should show lingering latency increases lasting 5-10 trials, a “hangover” from the irreversible restructuring.

These predictions are testable with current neuroscience tools: fMRI, single-neuron recordings, reaction time studies. If the brain doesn’t behave this way, the theory is falsified.

When One Sphere Watches Another, It Learns Differently

There’s a beautiful emergent property when multiple Sphere systems interact.

If System A observes System B make an irreversible choice with bad consequences, A doesn’t just update a parameter saying “avoid that”. Instead, B’s observed outcome becomes a high-significance memory point in A’s manifold, triggering the same global reorganization as if A had experienced it directly.

This creates empathy-like behavior without requiring emotional mirroring or theory of mind. A preventively reconfigures itself because B’s irreversible fate provides structural information about the decision landscape they share.

It’s vicarious identity formation. System A doesn’t feel B’s pain, but it permanently reshapes itself based on witnessing B’s constraints.

What This Means for AI Development

If Gutstein is right, we’ve been approaching machine consciousness backwards. We don’t need bigger language models or more integrated information. We need:

- Genuine internal conflict mechanisms that can’t be instantly resolved

- Irreversible memory architectures where choices permanently constrain future possibilities

- Homeostatic drives that give the system skin in the game

Current AI is getting smarter without getting more conscious. The Sphere suggests consciousness isn’t an intelligence feature, it’s a structural necessity for systems navigating irreversible decisions under internal constraint.

Takeaway

The Sphere is explicitly a framework for functional consciousness, the organizational dynamics that generate agency and identity, not phenomenal consciousness (what it feels like to be something).

Does a system following these principles actually experience anything? That question remains unanswered, perhaps unanswerable. But Gutstein argues it might be the wrong question.

Instead he proposes to ask:

- Does a system with irreversible affective memory, sustained internal conflict, and path-dependent identity formation have sufficient structural properties to warrant the term “conscious agent”?

- Does it have a perspective, stakes, a trajectory that is genuinely its own?

If you’ve ever been genuinely stuck between two impossible choices, feeling the weight of a decision you know you can’t undo, you’ve experienced the conditions The Sphere claims are sufficient for consciousness.

Maybe consciousness isn’t about computation at all. Maybe it’s about managing the unbearable tension of being a finite system making infinite commitments.

Frequently Asked Questions (FAQs)

1. What is “The Sphere” model of consciousness in AI?

“The Sphere” is a theoretical framework proposed by Liav Gutstein, suggesting that consciousness arises from internal conflict, irreversible decisions, and memory reorganization, rather than raw computation. It emphasizes how tension between analytical and exploratory systems generates agency and identity in artificial agents.

2. How does The Sphere differ from traditional AI decision-making?

Unlike conventional AI that instantly selects the highest-scoring option, The Sphere maintains multiple conflicting options simultaneously. Decisions are irreversible, memory is reorganized, and the system experiences internal tension, mimicking the conscious deliberation humans experience.

3. Can current neuroscience test The Sphere theory?

Yes. The Sphere predicts simultaneous activation of executive (Ordered System) and default mode (Exploratory System) networks during high-conflict decisions, memory reorganization after tough choices, and accelerated decision-making under stress. These predictions are testable using fMRI, single-neuron recordings, and behavioral experiments.

4. What role do irreversible decisions play in consciousness?

Irreversible decisions are central to The Sphere. Once a choice is made, the system’s memory and identity reorganize permanently. This path-dependent structure mirrors how humans develop identity and agency through consequences of their choices.

5. How could The Sphere influence the development of conscious AI?

The Sphere suggests AI could become structurally conscious not by increasing computational power, but by implementing: genuine internal conflict, irreversible memory architectures, and homeostatic drives that create stakes. This contrasts with current AI models that optimize for efficiency or prediction.

6. How does The Sphere explain memory and significance in decision-making?

Memories are organized on a spherical manifold, with central positions encoding highly significant experiences and outer layers representing less influential ones. Memory significance depends on emotional intensity, reactivation frequency, goal relevance, and homeostatic impact, guiding future decisions and identity formation.

7. Is The Sphere framework applicable globally or regionally in AI research?

The Sphere framework is globally relevant for AI and cognitive neuroscience research. Its principles can guide LLM development, robotic decision-making, and artificial consciousness studies across research labs worldwide, from North America to Europe and Asia, emphasizing universal constraints of conflict, choice, and memory.

Source: The full paper, “The Sphere: The Rise of Artificial Consciousness from Conflict, Memory, and Irreversible Choice” by Liav Gutstein (December 2025), provides detailed mathematical specifications and neural mappings for readers wanting to explore the architecture in depth.